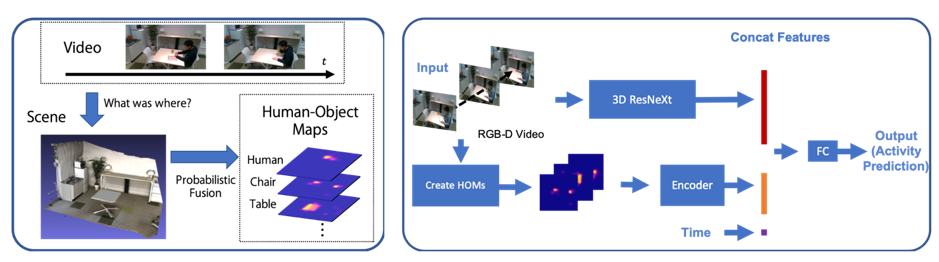

Daily activity recognition using human-object probability maps

Daily activities that last from several minutes to several hours often consist of several primitive actions, which makes them hard to recognize from video input. In this research, we observed “what”, “where”, “when”, and “how” humans performed daily activities and used these features to recognize those activities more accurately. We introduced Human-Object Maps (HOMs), probability maps of where humans and objects were located, and used them as features for activity recognition. We evaluated the effectiveness of these maps on a dataset we created, which consists of several daily activities performed throughout the lab.
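A minimal sketch of how such a map could be built, assuming detections have already been projected to (x, y) floor coordinates of the lab. The function names, grid size, and per-class layout are hypothetical illustrations, not the authors' implementation: each class's positions are binned into a 2D histogram, normalized to sum to 1, and the per-class maps are flattened into one feature vector.

```python
import numpy as np

def human_object_map(points, grid_shape, extent):
    """Bin (x, y) positions into a 2D grid and normalize it into a probability map.

    points:     iterable of (x, y) positions on the floor plane.
    grid_shape: (rows, cols) of the map; rows follow y, columns follow x.
    extent:     (x_min, x_max, y_min, y_max) area covered by the map.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 2)
    x_min, x_max, y_min, y_max = extent
    counts, _, _ = np.histogram2d(
        pts[:, 1], pts[:, 0],            # y first so that rows index y
        bins=grid_shape,
        range=((y_min, y_max), (x_min, x_max)),
    )
    total = counts.sum()
    return counts / total if total > 0 else counts  # empty class -> all-zero map

def hom_features(detections, grid_shape, extent):
    """Concatenate one map per class (sorted by class name) into a flat feature vector.

    detections: dict mapping a class name (e.g. "person", "cup") to its list of (x, y) positions.
    """
    maps = [human_object_map(detections[name], grid_shape, extent)
            for name in sorted(detections)]
    return np.concatenate([m.ravel() for m in maps])
```

In a full system one would presumably accumulate such maps over a time window, so that "where" and "when" are both reflected in the feature.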

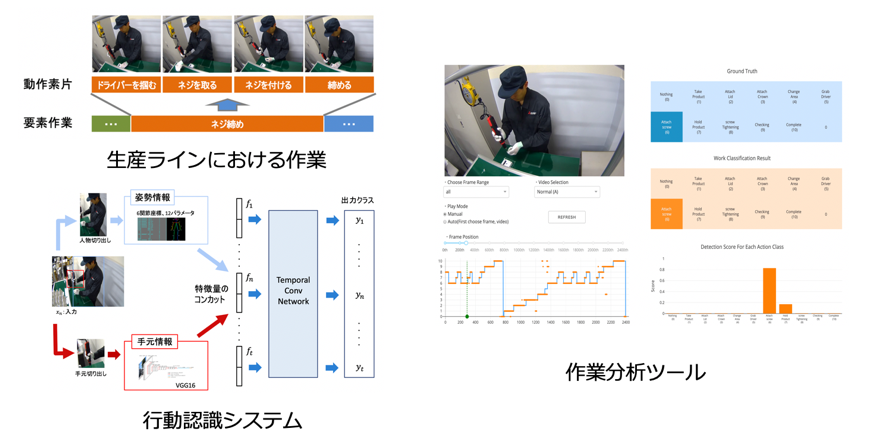

Fine-grained action segmentation for industrial production line

In this research, we tackle fine-grained action recognition on videos of workers recorded in production line scenes. Unlike general action recognition datasets, our dataset consists of fine-grained actions in which the worker uses only the hands and arms, which makes frame-wise action detection difficult when the RGB image of each frame is the only input. To tackle this problem, we combined pose features and hand features: pose features capture the movements of the arms, while hand features capture the movements of the hands and also indicate which tool the worker is using. On our original dataset, we showed that our model achieves a high recognition rate and is robust to variation in environments and workers.
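A minimal sketch of per-frame early fusion, assuming pose features are 2D upper-body keypoints and hand features are fixed-length vectors (for example, embeddings of hand crops). The helper names, the reference keypoint, and the keypoint pair used for scale normalization are hypothetical choices. The frame-wise classifier itself is omitted; `frames_to_segments` only shows how frame-wise labels turn into action segments.

```python
import numpy as np

def normalize_pose(keypoints, ref_index=0, scale_pair=(1, 2)):
    """Make 2D keypoints translation- and scale-invariant.

    keypoints:  (T, K, 2) array, K keypoints per frame.
    ref_index:  keypoint used as the origin (hypothetical choice, e.g. the neck).
    scale_pair: two keypoints whose distance sets the scale (e.g. the shoulders).
    """
    kp = np.asarray(keypoints, dtype=float)
    centered = kp - kp[:, ref_index:ref_index + 1, :]
    i, j = scale_pair
    scale = np.linalg.norm(kp[:, i, :] - kp[:, j, :], axis=1)
    scale = np.where(scale > 1e-6, scale, 1.0)  # avoid division by zero
    return centered / scale[:, None, None]

def fuse_features(keypoints, hand_feats, **pose_kwargs):
    """Concatenate normalized pose and standardized hand features: shape (T, 2K + D)."""
    pose = normalize_pose(keypoints, **pose_kwargs)
    pose = pose.reshape(pose.shape[0], -1)
    hand = np.asarray(hand_feats, dtype=float)
    std = hand.std(axis=0)
    hand = (hand - hand.mean(axis=0)) / np.where(std > 1e-6, std, 1.0)
    return np.concatenate([pose, hand], axis=1)

def frames_to_segments(labels):
    """Collapse frame-wise labels into (label, start, end) segments, end exclusive."""
    segments, start = [], 0
    for t in range(1, len(labels) + 1):
        if t == len(labels) or labels[t] != labels[start]:
            segments.append((labels[start], start, t))
            start = t
    return segments
```

For example, `frames_to_segments(["a", "a", "b"])` yields `[("a", 0, 2), ("b", 2, 3)]`. Normalizing the pose separately keeps arm movements comparable across workers of different heights and camera placements.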

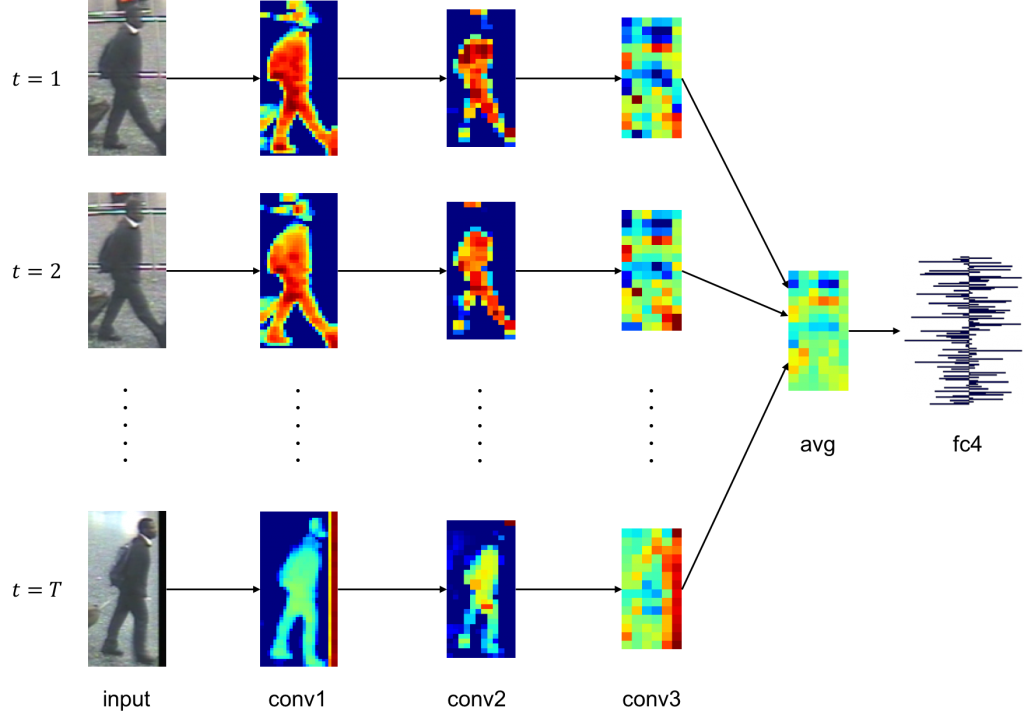

Human re-identification by distance learning using CNN

We proposed a new method that re-identifies people by learning their similarity in video with a convolutional neural network. The network extracts features from each image of a person in the video and maps them to embedding vectors in a Euclidean space, so that the distance between embedding vectors corresponds directly to a distance measure between people. Parameters are updated with an improved learning method called Entire Triplet Loss, which takes into account every triplet that can be formed within the mini-batch before each update. This simple change to the parameter update greatly improved the generalization performance of the network and made the embedding vectors easier to separate by person. In evaluation experiments, the method achieved a state-of-the-art re-identification rate on an international benchmark dataset.
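The idea of considering every triplet in the mini-batch can be sketched in NumPy as a "batch-all" hinge triplet loss. The margin value, averaging over all valid triplets, and the function names are assumptions for illustration and may differ from how Entire Triplet Loss is actually defined. NumPy only computes the loss value here; training would express the same operations in an autodiff framework.

```python
import numpy as np

def pairwise_distances(emb):
    """Euclidean distance matrix between all embedding vectors, shape (B, B)."""
    sq = np.sum(emb ** 2, axis=1)
    d2 = sq[:, None] - 2.0 * emb @ emb.T + sq[None, :]
    return np.sqrt(np.maximum(d2, 0.0))  # clip tiny negatives from rounding

def entire_triplet_loss(emb, labels, margin=0.3):
    """Hinge triplet loss averaged over every valid (anchor, positive, negative) triplet."""
    emb = np.asarray(emb, dtype=float)
    labels = np.asarray(labels)
    d = pairwise_distances(emb)
    same = labels[:, None] == labels[None, :]
    not_self = ~np.eye(len(labels), dtype=bool)
    pos_mask = same & not_self   # (a, p): same identity, a != p
    neg_mask = ~same             # (a, n): different identity
    # loss[a, p, n] = d(a, p) - d(a, n) + margin, for all index combinations at once
    loss = d[:, :, None] - d[:, None, :] + margin
    valid = pos_mask[:, :, None] & neg_mask[:, None, :]
    loss = np.maximum(loss, 0.0)[valid]
    if loss.size == 0:           # e.g. no identity appears twice in the batch
        return 0.0
    return float(loss.mean())
```

Broadcasting builds the full anchor × positive × negative array, which grows as B³ in the batch size B but stays small for typical mini-batches. Compared with sampling one triplet per anchor, every valid triplet contributes to each update.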

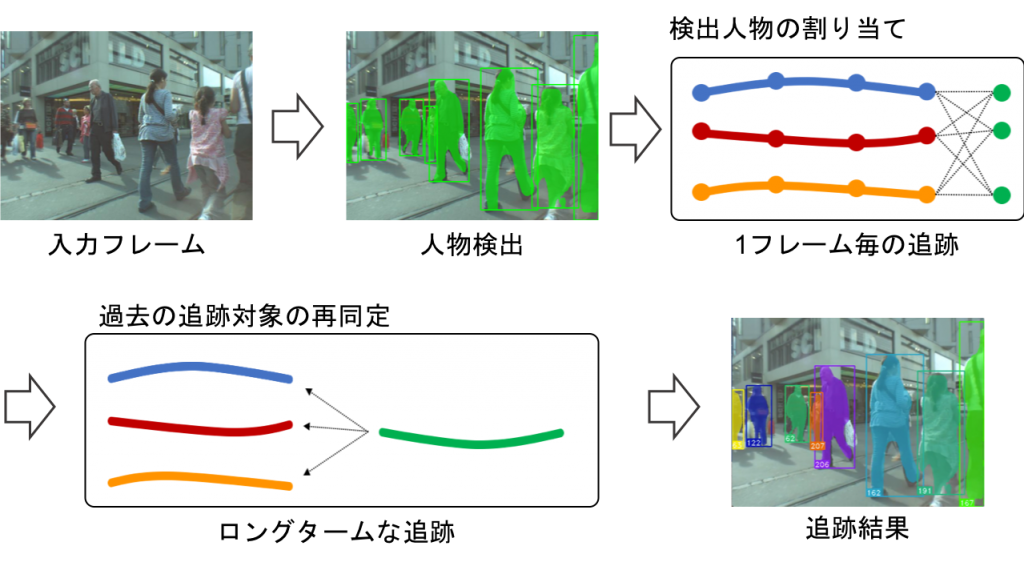

On-line multiple object tracking using re-identification of tracking trajectory

Many existing online multiple object tracking methods adopt a tracking-by-detection approach, which associates over time the bounding boxes obtained by running an object detector on every frame of a video. However, these methods cannot track objects that the detector misses, for example because of occlusion. We therefore propose a method that returns a lost object to the tracking state by re-identifying its tracking trajectory. A convolutional neural network produces embedding vectors that compactly represent high-dimensional appearance features of the object, and trajectories are re-identified according to the distance between their embedding vectors. Using the object's mask image, obtained by segmentation, as the network input makes the re-identification robust to changes in the background. Furthermore, because the re-identification decision for each pair of trajectories is based on the distance between low-dimensional vectors, it adds little computational cost.
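A sketch of trajectory re-identification by embedding distance, assuming each trajectory stores the per-frame embedding vectors produced by the CNN. Averaging and L2-normalizing them, the greedy matching, the `max_dist` threshold, and the function names are hypothetical choices for illustration, not the authors' procedure.

```python
import numpy as np

def track_embedding(embs):
    """Average a trajectory's per-frame embeddings and L2-normalize the result."""
    m = np.asarray(embs, dtype=float).mean(axis=0)
    n = np.linalg.norm(m)
    return m / n if n > 0 else m

def reidentify(lost_tracks, new_tracks, max_dist=0.5):
    """Greedily re-link new trajectories to lost ones by embedding distance.

    lost_tracks, new_tracks: dicts mapping track id -> list of per-frame embeddings.
    Returns {new_id: lost_id} for pairs whose distance is at most max_dist.
    """
    lost_ids, new_ids = list(lost_tracks), list(new_tracks)
    if not lost_ids or not new_ids:
        return {}
    L = np.stack([track_embedding(lost_tracks[i]) for i in lost_ids])
    N = np.stack([track_embedding(new_tracks[j]) for j in new_ids])
    dist = np.linalg.norm(N[:, None, :] - L[None, :, :], axis=2)  # (new, lost)
    matches, used_new, used_lost = {}, set(), set()
    for flat in np.argsort(dist, axis=None):          # closest pairs first
        r, c = (int(v) for v in np.unravel_index(flat, dist.shape))
        if dist[r, c] > max_dist:
            break                                      # all remaining pairs are farther
        if r in used_new or c in used_lost:
            continue                                   # each track is matched at most once
        matches[new_ids[r]] = lost_ids[c]
        used_new.add(r)
        used_lost.add(c)
    return matches
```

Each comparison is only a subtraction and a norm over short vectors, which is consistent with the small extra cost described above. A Hungarian assignment could replace the greedy loop if globally optimal matching is needed.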

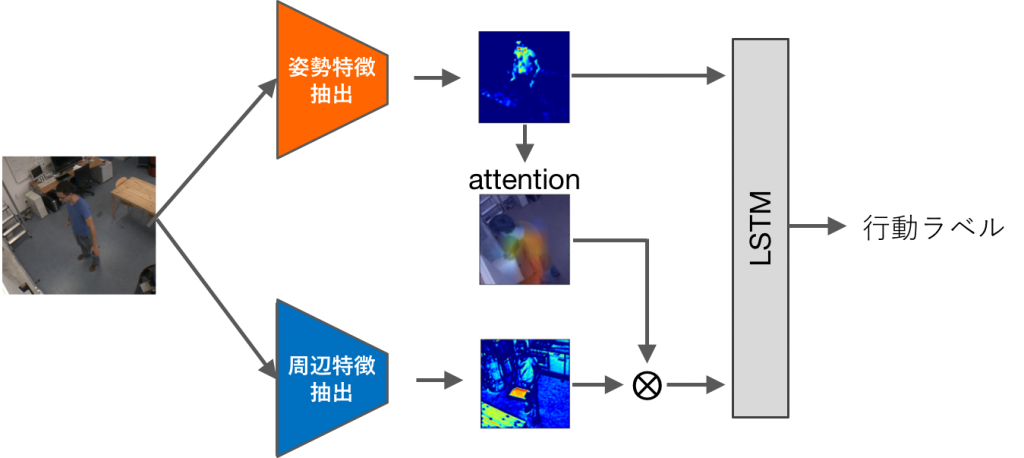

Time-series behavior recognition in behavior transition videos

In this study, we addressed behavior recognition in videos in which multiple actions transition continuously, and proposed several time-series analyses using a hierarchical LSTM. We also proposed ways to make effective use of peripheral features: incremental learning of peripheral information, and filtering of peripheral features by posture features, with posture information, which is robust to environmental change, as the core representation. On a dataset of behavior transition videos, our approach improved on the conventional method.
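One possible reading of a hierarchical LSTM, sketched as an inference-only NumPy forward pass with random weights: a frame-level LSTM summarizes each fixed-length window of per-frame features, and a segment-level LSTM runs over those window summaries. The window size, layer sizes, and class names are hypothetical; the peripheral-feature learning and posture-based filtering are not shown.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class LSTM:
    """Single-layer LSTM forward pass with randomly initialized weights (inference only)."""

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        scale = 1.0 / np.sqrt(hidden_size)
        # One stacked matrix for the input, forget, candidate and output gates.
        self.W = rng.uniform(-scale, scale, (4 * hidden_size, input_size + hidden_size))
        self.b = np.zeros(4 * hidden_size)

    def run(self, xs):
        """xs: (T, input_size). Returns every hidden state, shape (T, hidden_size)."""
        h = np.zeros(self.hidden_size)
        c = np.zeros(self.hidden_size)
        outputs = []
        for x in xs:
            z = self.W @ np.concatenate([x, h]) + self.b
            i, f, g, o = np.split(z, 4)
            i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)
            c = f * c + i * np.tanh(g)   # update the cell state
            h = o * np.tanh(c)           # expose a gated view of it
            outputs.append(h)
        return np.stack(outputs)

class HierarchicalLSTM:
    """Frame-level LSTM per window, then a segment-level LSTM over the window summaries."""

    def __init__(self, feat_dim, frame_hidden, seg_hidden, window, seed=0):
        rng = np.random.default_rng(seed)
        self.window = window
        self.frame_lstm = LSTM(feat_dim, frame_hidden, rng)
        self.seg_lstm = LSTM(frame_hidden, seg_hidden, rng)

    def run(self, frames):
        """frames: (T, feat_dim). Returns one segment-level state per window."""
        frames = np.asarray(frames, dtype=float)
        summaries = [
            self.frame_lstm.run(frames[s:s + self.window])[-1]  # last state of the window
            for s in range(0, len(frames), self.window)
        ]
        return self.seg_lstm.run(np.stack(summaries))
```

With 23 frames and `window=5`, the frame-level LSTM produces 5 window summaries (the last covering 3 frames), so the upper LSTM sees a short sequence whose steps can follow transitions between actions.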

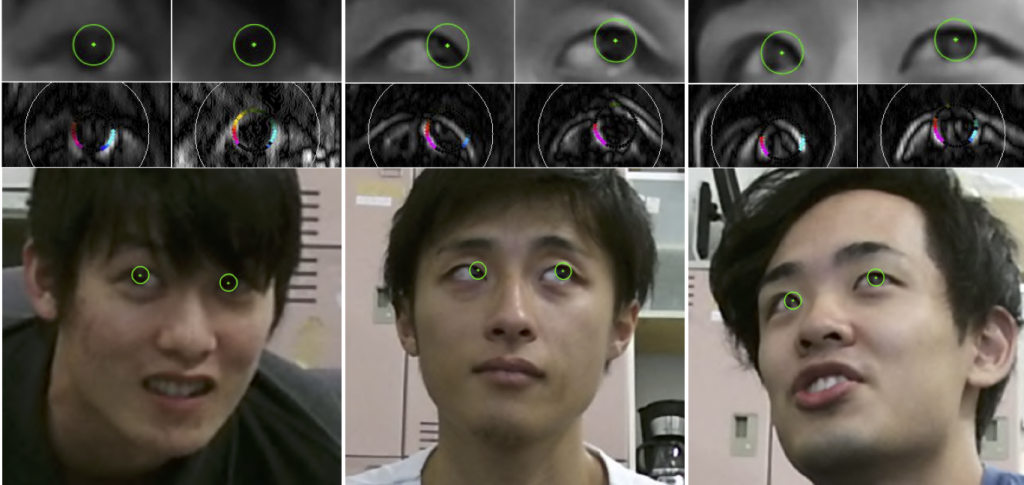

Calibration-free gaze estimation

Many existing gaze estimation methods require special equipment such as infrared LEDs and distance sensors, or a prior calibration step. In this study, we propose a calibration-free method for estimating the gaze point with a camera across a wide range of head positions, aiming at gaze estimation suitable for practical use in society. Building on an iris tracking method that is robust regardless of image resolution, we demonstrate that calibration-free gaze estimation is feasible over a large space using a pipeline of facial feature point detection, iris tracking, and gaze point estimation. We aim to apply this method in various fields.
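The final gaze point estimation step can be illustrated with a simple geometric eye model: cast a ray from the 3D eyeball center through the 3D iris center and intersect it with the screen plane. This is a sketch under assumptions, not the authors' method: it assumes the earlier stages already provide both points in camera coordinates (for example, using an average eyeball radius), and it ignores the angular offset between the eye's optical and visual axes, which is one of the things per-user calibration usually corrects. The function names are hypothetical.

```python
import numpy as np

def gaze_point_on_plane(eyeball_center, iris_center, plane_point, plane_normal):
    """Intersect the ray from the eyeball center through the iris center with a plane.

    All inputs are 3-vectors in camera coordinates. Returns the 3D intersection,
    or None if the ray is parallel to the plane or points away from it.
    """
    e = np.asarray(eyeball_center, dtype=float)
    direction = np.asarray(iris_center, dtype=float) - e
    direction /= np.linalg.norm(direction)
    n = np.asarray(plane_normal, dtype=float)
    denom = n @ direction
    if abs(denom) < 1e-9:
        return None                       # gaze parallel to the plane
    t = n @ (np.asarray(plane_point, dtype=float) - e) / denom
    if t < 0:
        return None                       # plane is behind the eye
    return e + t * direction

def binocular_gaze_point(left, right, plane_point, plane_normal):
    """Average the per-eye intersections; each eye is (eyeball_center, iris_center)."""
    hits = [gaze_point_on_plane(e, i, plane_point, plane_normal) for e, i in (left, right)]
    hits = [h for h in hits if h is not None]
    return np.mean(hits, axis=0) if hits else None
```

For instance, with the eye 0.6 m in front of the camera and the screen assumed to lie in the camera plane z = 0, an iris displaced 6 mm sideways over a 12 mm depth offset maps to a gaze point 0.3 m to the side.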